- #Macports install package archive#

- #Macports install package code#

Responses are downloaded and printed in chunks. This allows for streaming and large file downloads without using too much memory. However, when colors and formatting are applied, the whole response is buffered and only then processed at once. You can use the --stream, -S flag so that the output is flushed in much smaller chunks without any buffering, which makes HTTPie behave kind of like tail -f for URLs.

Note that Accept-Encoding can't be set with --download.
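The difference between buffering a whole body and streaming it chunk by chunk can be sketched in plain Python. This is not HTTPie's implementation: `stream_copy` is a hypothetical helper, and an in-memory `io.BytesIO` stands in for the response body so the sketch runs without a network.

```python
import io


def stream_copy(src, dst, chunk_size=1024):
    """Copy src to dst one chunk at a time, flushing after every write.

    Memory use is bounded by chunk_size rather than by the full body size.
    Returns the total number of bytes copied.
    """
    total = 0
    while True:
        chunk = src.read(chunk_size)
        if not chunk:  # empty read means the stream is exhausted
            break
        dst.write(chunk)
        dst.flush()  # push each chunk out immediately, tail -f style
        total += len(chunk)
    return total


if __name__ == "__main__":
    body = io.BytesIO(b"line 1\nline 2\nline 3\n")  # stand-in for a response
    out = io.BytesIO()
    print(stream_copy(body, out, chunk_size=4), out.getvalue())
```

With a real request, `src` could be the object returned by `urllib.request.urlopen` and `dst` a file or `sys.stdout.buffer`; a buffered approach would instead call `src.read()` once and hold the entire body in memory.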

The --download option only changes how the response body is treated. You can still set custom headers, use sessions, --verbose, -v, etc. --download always implies --follow (redirects are followed), and it also implies --check-status (an error HTTP status results in a non-zero exit status code). HTTPie exits with status code 1 (error) if the body hasn't been fully downloaded. -dco is shorthand for --download --continue --output.

The response headers are always downloaded, even if they are not part of the output. When you are only printing the HTTP headers, the connection to the server is closed as soon as all the response headers have been received, so bandwidth and time aren't wasted downloading a body you don't care about.

Using file contents as values for specific fields is a very common use case, which can be achieved by adding a suffix to the field's separator. For example, instead of using a static string as the value of some header, you can use an operator that reads the value from a file.

Raw request body is a mechanism for passing arbitrary request data. In addition to crafting structured JSON and form requests with the request items syntax, you can provide a raw request body that will be sent without further processing. These two approaches for specifying request data (i.e., structured and raw) cannot be combined. There are three methods for passing raw request data, one of which is piping via stdin.

The universal method for passing request data is through redirected stdin. By default, stdin data is buffered and then used as the request body with no further processing. If you provide Content-Length, the request body is streamed without buffering. You may also use --chunked to enable streaming via chunked transfer encoding, or --compress, -x to compress the request body.
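Streaming with --chunked relies on HTTP/1.1 chunked transfer coding, which lets a client send a body whose total length isn't known up front. The wire framing can be sketched as follows; `encode_chunked` and `decode_chunked` are hypothetical helpers (not HTTPie code), and chunk extensions and trailers are deliberately ignored.

```python
def encode_chunked(chunks):
    """Frame an iterable of byte strings with HTTP/1.1 chunked transfer coding.

    Each chunk goes out as <size in hex>CRLF<data>CRLF, and the body is
    terminated by a zero-size chunk: 0CRLF CRLF.
    """
    for chunk in chunks:
        if not chunk:
            continue  # a zero-length chunk would signal end-of-body too early
        yield b"%x\r\n" % len(chunk) + chunk + b"\r\n"
    yield b"0\r\n\r\n"


def decode_chunked(data):
    """Reassemble a chunked body (no extensions or trailers, for illustration)."""
    body, pos = b"", 0
    while True:
        end = data.index(b"\r\n", pos)
        size = int(data[pos:end], 16)  # chunk size is hexadecimal
        if size == 0:
            return body
        start = end + 2
        body += data[start:start + size]
        pos = start + size + 2  # skip the chunk data and its trailing CRLF
```

Because every chunk carries its own size, the sender never needs a Content-Length header, which is what makes it possible to stream a body (for example from stdin) whose length is unknown when the request starts.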
Since MacPorts added support for binary archives, the current user experience is more similar to binary package managers like apt-get, with the ability to change configure and build options quite easily.

Request items come in several types:

- URL parameters: append the given name/value pair as a querystring parameter to the URL.
- HTTP headers: an arbitrary HTTP header, e.g. X-API-Token:123
- Data fields: request data fields to be serialized as a JSON object (default), to be form-encoded (with --form, -f), or to be serialized as multipart/form-data (with --multipart).
- Raw JSON fields: useful when sending JSON and one or more fields need to be a Boolean, Number, nested Object, or an Array.
- File upload fields: available with --form, -f and --multipart. With --form, the presence of a file field results in a --multipart request.

Note that the structured data fields aren't the only way to specify request data; a raw request body can be passed instead.
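The request-item syntax described above can be illustrated with a simplified, hypothetical parser. It is loosely modeled on the behavior described here, not taken from HTTPie: the `:` (header) and `:=` (raw JSON) separators appear in the text, while `=` for a plain string data field and `==` for a query parameter are assumptions made for this sketch. When several separators match, the earliest one wins, and on a tie the longer one wins, so `age:=42` is read as raw JSON rather than as a header.

```python
import json

# Separators recognized by this sketch. ":" and ":=" appear in the text;
# "=" (string data field) and "==" (query parameter) are assumptions.
SEPARATORS = ("==", ":=", "=", ":")


def split_item(item):
    """Split 'key<sep>value' at the earliest separator; longer wins on a tie."""
    best = None
    for sep in SEPARATORS:
        idx = item.find(sep)
        if idx == -1:
            continue
        if best is None or idx < best[0] or (idx == best[0] and len(sep) > len(best[1])):
            best = (idx, sep)
    if best is None:
        raise ValueError(f"no separator in {item!r}")
    idx, sep = best
    return item[:idx], sep, item[idx + len(sep):]


def build_request(items):
    """Sort request items into headers, query parameters and a JSON body."""
    headers, query, data = {}, {}, {}
    for item in items:
        key, sep, value = split_item(item)
        if sep == ":":
            headers[key] = value
        elif sep == "==":
            query[key] = value
        elif sep == "=":
            data[key] = value  # always serialized as a JSON string
        else:  # ":=" means the value is parsed as raw JSON (number, bool, list...)
            data[key] = json.loads(value)
    return headers, query, json.dumps(data)


if __name__ == "__main__":
    print(build_request(
        ["X-API-Token:123", "page==2", "name=Ann", "age:=42", "vip:=true"]
    ))
```

The `:=` branch is what lets a field become a Boolean, Number, Object or Array instead of a string: `name=Ann` yields `"Ann"`, whereas `age:=42` yields the number `42`.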

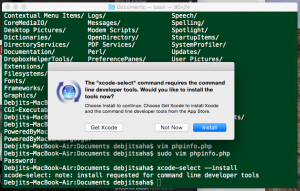

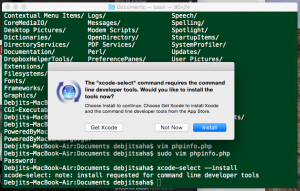

MacPorts uses Apple's tools to build, and it adds only a negligible overhead to the build time you would get outside of MacPorts; the bigger the package, the smaller the difference. If you experience a huge difference when building a program outside of MP, you should file a ticket on the issue tracker with the details. That said, I see the question is quite old: since 2.0 there's support for binary archives (cf. the Changelog). There's a macosforge-supported repository with buildbots that produce signed archives, and the default is to fetch these binary archives rather than building from source (which you can force using the -s flag).
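Why the difference shrinks for bigger packages can be seen by modeling the MacPorts overhead as a roughly fixed cost $o$ added to a build time $T$. Both the fixed-cost model and the 30-second figure below are illustrative assumptions, not measurements of MacPorts:

```latex
r(T) = \frac{o}{T}, \qquad
r(5\,\text{min}) = \frac{30\,\text{s}}{300\,\text{s}} = 10\%, \qquad
r(3\,\text{h}) = \frac{30\,\text{s}}{10\,800\,\text{s}} \approx 0.28\%
```

As $T$ grows, the relative overhead $r(T)$ tends to zero, which matches the observation that large packages build in practically the same time inside and outside MacPorts.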


MacPorts used to build only from source, and this can lead to a difference of several orders of magnitude compared to a package system that fetches binaries. Consider, for example, a fairly big package that takes a few hours to build, and compare this to the time needed to download it as an archive a few tens of MB in size.
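To put "several orders of magnitude" into numbers, the comparison can be worked through in a few lines of Python. The inputs are hypothetical: a 3-hour build, a 30 MB archive and a 10 MB/s download link; `orders_of_magnitude` is an illustrative helper.

```python
import math


def orders_of_magnitude(build_hours, archive_mb, bandwidth_mb_per_s):
    """Return log10(source build time / binary archive download time)."""
    build_s = build_hours * 3600
    download_s = archive_mb / bandwidth_mb_per_s
    return math.log10(build_s / download_s)


# 10,800 s of building vs. 3 s of downloading: a ratio of 3,600.
print(round(orders_of_magnitude(3, 30, 10), 2))  # prints 3.56
```

A ratio of 3,600 is between three and four orders of magnitude; a slower link or a smaller package narrows the gap, but only a very short build would close it.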
